Which of the Following Best Describes a Goal of Cross-validation

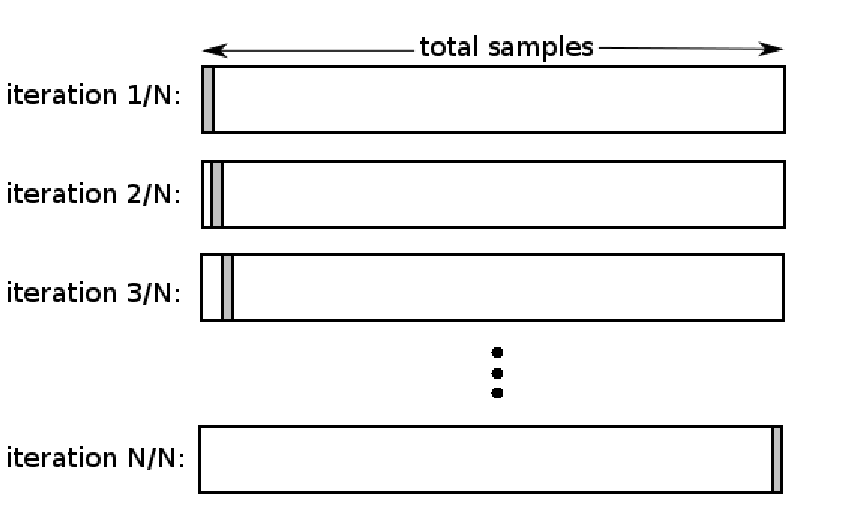

Use fold 1 as the testing set and the union of the other folds as the training set. Reserve some portion of the sample data set for testing.
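
A minimal sketch of that single step, assuming the folds are already given as lists of sample indices. `split_on_fold` is a hypothetical helper name, not a function from any library.

```python
def split_on_fold(folds, test_fold=0):
    """Use folds[test_fold] as the test set and the union of the other folds as training."""
    test = list(folds[test_fold])
    # Concatenate every fold except the test fold into one training list.
    train = [i for j, fold in enumerate(folds) if j != test_fold for i in fold]
    return train, test

# Hypothetical example: 9 sample indices already divided into 3 folds.
folds = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
train, test = split_on_fold(folds, test_fold=0)  # "fold 1" is index 0 in Python
print(train)  # [3, 4, 5, 6, 7, 8]
print(test)   # [0, 1, 2]
```

Repeating this with `test_fold` set to each fold in turn gives full k-fold cross-validation.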


Searching a multi-dimensional grid of parameters this way requires only one cross-validation loop, because each point in the grid is treated independently.

Leave-one-out cross-validation (LOOCV) is similar to leave-p-out (LpO) cross-validation, except that p = 1. The aim is to use a limited sample to estimate how the model is expected to perform in general when it makes predictions on data not used during training. The classic approach is a simple 80-20 train/test split, sometimes with other ratios such as 70-30 or 90-10.
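
A simple 80-20 holdout split can be sketched in plain Python. The name `holdout_split`, shuffling the indices first, and the fixed seed are assumptions for illustration; libraries such as scikit-learn ship their own utility (`train_test_split`) for this.

```python
import random

def holdout_split(indices, test_fraction=0.2, seed=0):
    """Shuffle the indices and reserve test_fraction of them as a held-out test set."""
    shuffled = list(indices)
    random.Random(seed).shuffle(shuffled)  # fixed seed so the split is reproducible
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)

train, test = holdout_split(range(100), test_fraction=0.2)  # an 80-20 split
print(len(train), len(test))  # 80 20
```

Passing `test_fraction=0.3` or `0.1` gives the 70-30 or 90-10 variants.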

Cross-validation can be used both to select the number of variables $n$ and to tune a parameter $\alpha$ over a multi-dimensional grid of pairs $(n, \alpha)$, where $n \in \{1, 2, \dots, P\}$ and $\alpha \in \{\alpha_1, \alpha_2, \dots, \alpha_K\}$. A good model is not the one that gives accurate predictions on the known (training) data, but the one that gives good predictions on new, unseen data. Before we go into all of the ways that you're doing it wrong, let's establish a clear definition of what exactly cross-validation is.
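
A sketch of searching the $(n, \alpha)$ grid, assuming a caller-supplied function `cv_error(n, alpha)` that runs one full cross-validation and returns the mean error. Both `grid_search` and `toy_cv_error` are hypothetical; the toy error is a stand-in so the example runs, not a real model.

```python
from itertools import product

def grid_search(cv_error, P, alphas):
    """Return the (n, alpha) pair with the lowest cross-validated error.

    Each grid point is scored independently by cv_error, so one
    cross-validation loop per point is enough.
    """
    scores = {(n, a): cv_error(n, a) for n, a in product(range(1, P + 1), alphas)}
    best = min(scores, key=scores.get)
    return best, scores

# Hypothetical stand-in for a real CV error, minimized at n = 3, alpha = 0.1.
def toy_cv_error(n, alpha):
    return (n - 3) ** 2 + (alpha - 0.1) ** 2

best, scores = grid_search(toy_cv_error, P=5, alphas=[0.01, 0.1, 1.0])
print(best)  # (3, 0.1)
```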

In the bioanalytical sense, cross-validation is a comparison of data from at least two different analytical methods (a reference method and a test method), or from the same method used by at least two different laboratories (a reference site and a test site), within the same study. In machine learning, by contrast, cross-validation is a way of evaluating estimator performance: it allows you to check your model's performance using one dataset for both training and testing.
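
The post does not say how results from the reference and test methods are compared. One simple way, assumed here purely for illustration, is the percent difference for each sample measured by both methods, relative to their mean. The function name and the sample values are hypothetical, and no acceptance limit is implied.

```python
def percent_differences(reference, test):
    """Per-sample percent difference: (test - ref) / mean(test, ref) * 100 (assumed metric)."""
    return [(t - r) / ((t + r) / 2) * 100 for r, t in zip(reference, test)]

# Hypothetical concentrations of the same samples from a reference and a test method (or site).
reference = [10.0, 20.0, 40.0]
test = [11.0, 19.0, 40.0]
print([round(d, 2) for d in percent_differences(reference, test)])
```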

However, if the number of observations in the original sample is large, leave-one-out cross-validation can still take a lot of time, because it fits one model per observation. K-fold cross-validation, which fits only k models, is the usual cheaper alternative.

In the leave-p-out strategy, p observations are used for validation and the rest are used for training. Fold assignment can also be random, so that each point has an equal chance of being placed into each fold. These splits are called folds, and there are many strategies for creating them.
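
Leave-p-out can be sketched by enumerating every size-p subset of the indices as the validation set. The generator name `leave_p_out` is hypothetical. The split count is $\binom{n}{p}$, which is why exhaustive methods get expensive as n grows.

```python
from itertools import combinations
from math import comb

def leave_p_out(n, p):
    """Yield every (train, validation) split that holds out exactly p of n observations."""
    all_idx = range(n)
    for val in combinations(all_idx, p):
        held = set(val)
        yield [i for i in all_idx if i not in held], list(val)

splits = list(leave_p_out(5, 2))
print(len(splits), comb(5, 2))  # 10 10
# Leave-one-out is the special case p = 1: one split per observation.
print(len(list(leave_p_out(5, 1))))  # 5
# The number of splits, C(n, p), grows quickly: comb(100, 2) == 4950.
```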

If you use cross-validation, you are in fact estimating the prediction error, not the training error. Cross-validation is a technique in which we train our model on a subset of the data set and then evaluate it on the complementary subset. We can use 3, 5, 10, or any number K of splits.
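
A minimal k-fold index generator, assuming a shuffle with a fixed seed so fold membership is random but reproducible. `k_fold` is a hypothetical name; scikit-learn's `KFold` is one library equivalent.

```python
import random

def k_fold(n, k, shuffle=True, seed=0):
    """Yield (train, test) index lists for k-fold cross-validation on n observations."""
    idx = list(range(n))
    if shuffle:
        random.Random(seed).shuffle(idx)  # random fold membership
    # Spread any remainder so fold sizes differ by at most one.
    sizes = [n // k + (1 if f < n % k else 0) for f in range(k)]
    start = 0
    for size in sizes:
        test = idx[start:start + size]
        train = idx[:start] + idx[start + size:]  # the complementary subset
        yield train, test
        start += size

for train, test in k_fold(150, 5):
    print(len(train), len(test))  # 120 30, printed five times
```

Each observation lands in exactly one test fold, so every point is used for evaluation once and for training k - 1 times.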

A model that simply repeated the labels of the samples it has just seen would have a perfect score, but would fail to predict anything useful on yet-unseen data. The three steps involved in cross-validation are: (1) reserve some portion of the sample data set, (2) train the model on the rest of the data set, and (3) test the model on the reserved portion.
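
The memorizing model described above can be shown with a toy sketch. The "model" is a lookup table of training labels with a most-common-label fallback; the function names and data are hypothetical.

```python
def fit_memorizer(X, y):
    """A 'model' that simply stores the training labels it has seen."""
    table = dict(zip(X, y))
    default = max(set(y), key=y.count)  # fall back to the most common training label
    return lambda x: table.get(x, default)

def accuracy(model, X, y):
    return sum(model(x) == label for x, label in zip(X, y)) / len(y)

# Hypothetical toy data: the label is 1 when x is even.
X_train, y_train = [0, 1, 2, 3, 4, 6], [1, 0, 1, 0, 1, 1]
X_new, y_new = [5, 7, 8, 9], [0, 0, 1, 0]

model = fit_memorizer(X_train, y_train)
print(accuracy(model, X_train, y_train))  # 1.0 on data it has already seen
print(accuracy(model, X_new, y_new))      # 0.25 on unseen data
```

Scoring on the training data alone would report a perfect model; only the held-out score reveals that it learned nothing general.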

This International Conference on Harmonization (ICH) guidance addresses the choice of control group in clinical trials, discussing five principal types of controls and two important purposes of… Learning the parameters of a prediction function and testing it on the same data is a methodological mistake.

Cross-validation can be performed manually for any model-metric combination. I think this is best described with a picture, in this case one showing k-fold cross-validation. Cross-validation is primarily used in applied machine learning to estimate the skill of a machine learning model on unseen data.
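
Manual cross-validation for an arbitrary model and metric can be sketched by passing both in as functions. The interface below, where `fit` returns a predict callable and `metric(y_true, y_pred)` returns a number, is an assumption for illustration. So are the mean-predictor model and the MSE metric in the demo.

```python
import random
from statistics import mean

def cross_validate(X, y, fit, metric, k=5, seed=0):
    """Score any (fit, metric) pair with k-fold cross-validation; return (mean, per-fold)."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    folds = [idx[f::k] for f in range(k)]  # k disjoint folds of near-equal size
    scores = []
    for f, test in enumerate(folds):
        train = [i for g, fold in enumerate(folds) if g != f for i in fold]
        predict = fit([X[i] for i in train], [y[i] for i in train])
        scores.append(metric([y[i] for i in test], [predict(X[i]) for i in test]))
    return mean(scores), scores

# Hypothetical model and metric: predict the training mean, score with mean squared error.
def fit_mean(X_train, y_train):
    m = mean(y_train)
    return lambda x: m

def mse(y_true, y_pred):
    return mean((t - p) ** 2 for t, p in zip(y_true, y_pred))

X = list(range(20))
y = [2.0 * x for x in X]
avg, per_fold = cross_validate(X, y, fit_mean, mse, k=5)
print(len(per_fold), avg)
```

Swapping in a different `fit` or `metric` changes nothing else, which is the point of doing it by hand.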

But it is not for model building, since for that you want to exploit every single observation you have in order to build a model that makes predictions. According to Wikipedia, exhaustive cross-validation methods are those that learn and test on all possible ways to divide the original sample into a training set and a validation set. In cross-validation, we do more than one split.

The harmonization team has the following definition of cross validation.

The goal of cross-validation is to define a data set for testing the model during the training phase (i.e., a validation set). The advantage is that it saves time. Cross-validation can help you improve your ML model.

Stratified cross-validation biases fold selection so that certain variables (typically the class label) are equally represented in each fold. The ultimate goal of a machine learning engineer or data scientist is to develop a model that makes predictions on new data or forecasts future events on unseen data.
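
A sketch of stratified fold assignment, assuming stratification on a single class label and a deterministic round-robin deal within each class. Real implementations usually shuffle within each class first. `stratified_folds` is a hypothetical name.

```python
from collections import defaultdict

def stratified_folds(labels, k):
    """Assign indices to k folds so each class is spread as evenly as possible."""
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    folds = [[] for _ in range(k)]
    pos = 0  # keep counting across classes so fold sizes stay balanced too
    for members in by_class.values():
        for i in members:
            folds[pos % k].append(i)  # deal each class round-robin across the folds
            pos += 1
    return folds

labels = ["a"] * 10 + ["b"] * 5
for fold in stratified_folds(labels, k=5):
    print([labels[i] for i in fold])  # ['a', 'a', 'b'] for every fold
```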

Train the model using the rest of the data set. Cross-validation is for model checking: it allows you to repeatedly train and test on a single set of data. If you had an unlimited amount of data, you would not need cross-validation at all. With k = 5 and 150 observations, each of the 5 folds would have 30 observations.

Cross-validation is a technique used to protect against overfitting in a predictive model, particularly when the amount of data may be limited. This chapter will introduce no new modeling techniques, but will instead focus on evaluating models through cross-validation. What is the purpose of cross-validation?

It is also used to flag problems like overfitting or selection bias, and it gives insight into how the model will generalize to an independent dataset. Two types of exhaustive cross-validation are leave-p-out and leave-one-out cross-validation.

Cross-validation is also a comparison of validation parameters when two or more bioanalytical methods are used to generate data within the same study or across different studies. In machine learning, a validation data set is used to limit problems like overfitting and underfitting and to gain insight into how the model will generalize to an independent data set.

How do you explain cross-validation? The purpose of cross-validation is to test the ability of a machine learning model to predict new data.

In cross-validation, you make a fixed number of folds (partitions) of the data, run the analysis on each fold, and then average the results into an overall error estimate. Split the dataset into K equal partitions, or folds; for example, k = 5 for a dataset of 150 observations.

It can be used to determine inter-method equivalency or to assess inter-laboratory execution of the same method. The goal of cross-validation is to test the model's ability to predict new data that was not used in estimating it, in order to flag problems like overfitting or selection bias and to give insight into how the model will generalize to an independent dataset (i.e., an unknown dataset, for instance from a real problem). Cross-validation is often considered the gold standard for this kind of evaluation.

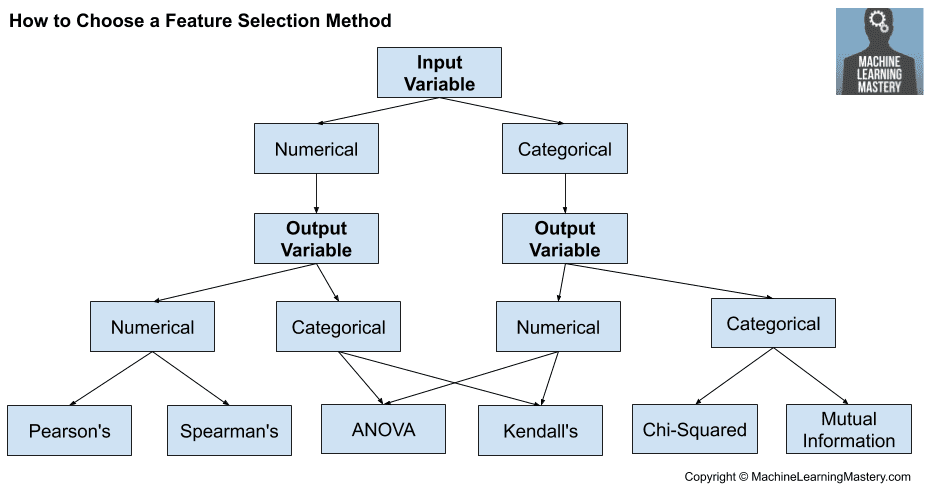

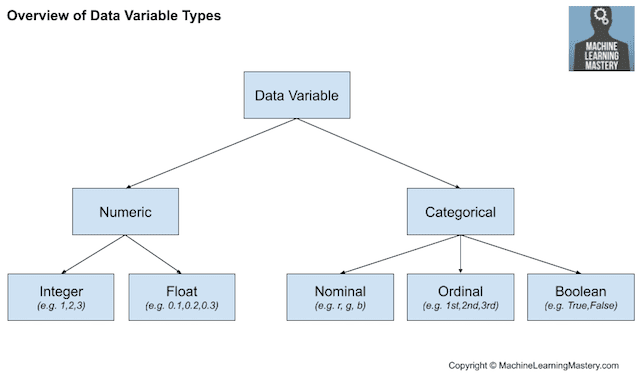

How To Choose A Feature Selection Method For Machine Learning

Cross Validation Explained Evaluating Estimator Performance By Rahil Shaikh Towards Data Science
